A fullstack Go + ReactJS App deployed with Docker

Oct 23, 2016 20:00 · 1505 words · 8 minutes read

Disclaimer: this project has changed.

Nginx has been removed among other fancy things.

Posts about updates pending…

See the latest code for info: Release v2.0 (Drop Nginx, convert to Traefik)

/Disclaimer

What?

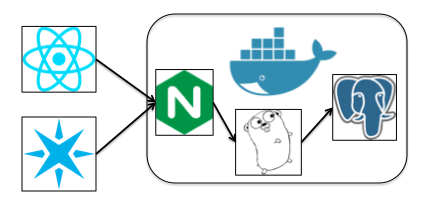

I’ve written a fullstack app: Go backend, ReactJS web frontend, deployed using Docker-Compose.

I call it Freyr, obligatory screenshots:

You can read more about the app itself on its github page. You can even view the app actually running at frey.erdmanczyk.com. In this post I’ll share an overview of the app’s design.

It’s yet another ‘arduino’ (in this case a Particle Core) plant sensor project: measuring temperature, humidity, light, etc. with sensors and an MCU and then sending the readings to the cloud. Unlike many other projects, however, the cloud server is custom built.

Why?

I cover the ‘why’ of the app on the github page, but why this post?

If you’re looking to write an app in Go, there is hardly a lack of resources for learning: tutorials, examples, existing libraries, and more. Go has a rich community.

My goal with this post isn’t to make a mega-tutorial covering all the how-to’s of what I did in this project, but to provide a simple example of pulling those components together into a Thing™.

Rather than an exhaustive overview of how to build this from the ground up, this will instead be a high-level overview of the app, its components, and their purposes. I’ll make some assumptions that the reader understands how the underlying technology works, but if you see something cool and want to know more feel free to comment/e-mail ☺.

How does this work?

- Deployed via docker-compose

- Nginx gateway

- ReactJS frontend

- Go backend w/ Postgres database

- Google oauth authentication, JWT managed sessions, HMAC signed API access

Deployment

Docker-compose was great because I could script my app environments. This made spinning up and tearing down the apps on the fly super easy.

Another benefit was that the provider I used for this project, DigitalOcean, has a docker-machine driver. I created my VM with docker-machine, and could then use the same docker client commands to deploy my app in production as I use to test on my local machine.

The repository has four Docker Compose files:

- docker-compose.yml: Production deployment, pulls all files into the images

- docker-compose.debug.yml: Rather than copying in files, mounts directories into the container, which speeds up launching and allows live editing of things such as UI files.

- docker-compose.integration.yml: Runs integration tests, organized as unit tests that require external resources such as database connections.

- docker-compose.acceptance.yml: Runs full-path acceptance tests, performing API calls and asserting results

I used a Makefile to simplify some commonly used commands e.g. running tests, building binaries and deploying.

Nginx

My app doesn’t need any load balancing yet. Rather, at this stage I employ Nginx for other features including:

- Terminating SSL

- Redirecting HTTP -> HTTPS

- Serving static files and handling 304 ‘Not Modified’ responses

- Gzipping responses when applicable

- Routing ‘/api/’ requests to the backend.

I’m admittedly not an ops or Nginx configuration expert, so I owe a lot to The Google and Stack Overflow for getting this working.

All nginx config is in the nginx/conf directory.

ReactJS Frontend (+ some D3.js)

I picked ReactJS mainly because of how simple it was to get up and going vs. other frameworks. I wanted to write Go code; I didn’t want to spend my time learning what the perfect frontend framework is. The less time I spent on the frontend the better, but I also didn’t want to half-ass it… too badly.

All ReactJS and other static files are packed with Nginx in the nginx/static directory. I used the free grayscale Bootstrap theme for CSS/layout. The background picture I actually took myself on a hike.

I use Webpack to build the app files into a single bundle.js. My development docker-compose file was handy for working on this because I could set webpack to watch for changes and rebuild on the fly, updating my changes in the container.

The web page is a single-page app. All paths on Nginx not matching /api or a valid path in the content directory serve the index.html file. This allows users to return to ‘pages’ within the app courtesy of react-router’s functionality. To demonstrate try going to the demo, logging in as the demo user, navigating to a chart, and then copying and pasting the url into a new browser tab.

The app also includes charts, for which I chose d3.js. It’s easy to make fun of Javascript, but there are those who do make good things with it, and D3.js is one of those things. It took some magic to get d3 to work with React, because of how React uses a virtual DOM representation, but some code from react-faux-dom came to the rescue.

Go backend

Go powers the backend, the meat of the project, which handles:

- Negotiating oauth with Google

- Issuing signed JWTs to authenticated users

- Authorizing HMAC signed web requests for API access

- Receiving, storing, and serving sensor readings

This was the part I really cared about; by trade I consider myself a backend developer.

I won’t do the intro to ‘What Go Is™’ that seems obligatory on every blog post.

I will say I have really enjoyed programming in Go. Having a background in C, then C++, then Python, working in Go has been a happy combination of what I’ve enjoyed between those worlds. It’s got some problems to fix, but it gets a lot of things satisfyingly right.

I could spend a whole couple blog posts talking about how I designed the Go backend, but instead I’ll just point at some key design features I chose writing it:

- Using interfaces to model data sources: separating concerns in code, enabling mocking for unit tests, and allowing extensible swapping of data sources.

- No global config or config loading in libraries, only loading configuration in main and passing to components via parameters, which also enables easier unit testing.

- Sticking to the standard lib as much as possible (e.g. net/http), making the code more reusable and less dependent on third party libraries.

- Using contexts in http handlers. I used the apollo library for chaining, but need to switch to the context support built into the standard library as of Go 1.7.
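To make the "no global config" point in the list above concrete, here is a minimal sketch of the pattern, not the project's actual code. The `Config` fields, the environment variable names, and the `Server` type are all hypothetical; the only idea taken from the list is that configuration is read once in main and handed to components as a parameter.

```go
package main

import (
	"fmt"
	"os"
)

// Config holds the settings the app needs. Field names are hypothetical.
type Config struct {
	Addr        string
	DatabaseURL string
}

// LoadConfig reads settings through the supplied lookup function.
// main passes os.Getenv; a test can pass a fake lookup instead.
func LoadConfig(getenv func(string) string) Config {
	cfg := Config{
		Addr:        getenv("ADDR"),         // hypothetical variable name
		DatabaseURL: getenv("DATABASE_URL"), // hypothetical variable name
	}
	if cfg.Addr == "" {
		cfg.Addr = ":8080" // assumed default for the sketch
	}
	return cfg
}

// Server receives its settings as a parameter instead of reading globals.
type Server struct {
	cfg Config
}

// NewServer builds a Server from an explicit Config.
func NewServer(cfg Config) *Server {
	return &Server{cfg: cfg}
}

// Describe reports the address the server was configured with.
func (s *Server) Describe() string {
	return "listening on " + s.cfg.Addr
}

func main() {
	// The environment is touched only here, in main.
	srv := NewServer(LoadConfig(os.Getenv))
	fmt.Println(srv.Describe())
}
```

Because nothing below main reads the environment, a unit test can build a `Server` from a literal `Config` without any setup.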

One example of how I used interfaces is with the models.ReadingStore which is implemented by the DB type. When implementing handlers such as PostReadings and GetLatestReadings, the functions accept the interface type rather than DB. This allowed me to test the handlers using a mock and the httptest package in readings_test.go.

Rather than using a framework such as Gin, Echo, or even Gorilla (which actually plays very well with the stdlib) I chose to stick to the standard library as much as possible for handlers. I only used negroni to easily get a couple of handy middlewares for free. I didn’t want my project code heavily dependent on a third-party framework, lest I leave the project for a bit and come back to find the library deprecated or no longer compatible with updated language versions. This also allows easier switching between libraries that play nice with the standard library.

Ok, what about the sensors?

I mentioned this app’s main purpose was to gather and log sensor data. I also mentioned some security features. How do those work together?

I briefly hand-waved at the ability to verify API access via signed HTTP requests. Once a user is logged in, they can access the /api/secret endpoint to get a one-time-only private secret. This can be used to access the API as their user by signing the request in a certain way. The secret can also be rotated if needed. I wrote a CLI to make these operations more convenient.
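The post doesn't spell out the signing scheme, so the following is only a generic sketch of HMAC request signing with the standard library, not Freyr's actual format. Which fields get signed (method, path, timestamp, body), their order, and the hex encoding are all assumptions, as are the `Sign` and `Verify` names.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// digest computes an HMAC-SHA256 over the request fields.
// The choice and order of signed fields is an assumption for illustration.
func digest(secret []byte, method, path, timestamp string, body []byte) []byte {
	mac := hmac.New(sha256.New, secret)
	mac.Write([]byte(method + "\n" + path + "\n" + timestamp + "\n"))
	mac.Write(body)
	return mac.Sum(nil)
}

// Sign returns the hex signature a client would attach to its request.
func Sign(secret []byte, method, path, timestamp string, body []byte) string {
	return hex.EncodeToString(digest(secret, method, path, timestamp, body))
}

// Verify recomputes the signature on the server and compares in constant time.
func Verify(secret []byte, method, path, timestamp string, body []byte, signature string) bool {
	got, err := hex.DecodeString(signature)
	if err != nil {
		return false
	}
	return hmac.Equal(got, digest(secret, method, path, timestamp, body))
}

func main() {
	secret := []byte("demo-secret") // stands in for the secret from /api/secret
	body := []byte(`{"temperature":21.5}`)
	sig := Sign(secret, "POST", "/api/reading", "1477252800", body)

	fmt.Println(Verify(secret, "POST", "/api/reading", "1477252800", body, sig))         // true
	fmt.Println(Verify(secret, "POST", "/api/reading", "1477252800", []byte("{}"), sig)) // false
}
```

Including a timestamp in the signed data lets a server also reject stale requests, which limits replay of a captured signature; the sketch leaves that check out.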

You may notice I named my app Freyr and CLI surtr. These are references to the Norse God and jötunn adversary at Ragnarok.

Currently the MCU I use enables registering a webhook for whenever data is posted using a provided firmware function. This webhook can send a POST request to a specified endpoint, in this case /api/reading. Unfortunately, the webhook functionality available at the time I was doing this work didn’t provide a way to sign requests, so the best that could be done was to configure the webhook to include a signed JWT and headers with the POST request. Admittedly not the most secure method, but given the amount of free time I had(/have) it was a compromise for now that got me going. I mean, people would look at what I’ve done already and be awed by my apparent amount of free time.

Other things worth mentioning

- Free certs with LetsEncrypt!

- Automated testing (because why not) with Travis CI

- A+ Go Report :)

- Godoc compliant

In Summary

That’s a high level overview of the app, with a little more exposition on the Go pieces. Hopefully this serves as a handy example to others working on similar projects. In the future I look to add more features to this project, such as the ability to water plants and splitting the backend into smaller microservices. All in time.